Recap from Day 006

In day 006, we learned that When you are working on a classification problem, begin by determining whether the problem is binary or multiclass. We then went further and looked at what binary or multiclass is with examples. We concluded with some common classification algorithms.

Today, we’ll continue with Common Classification Algorithms.

Common Classification Algorithms continued

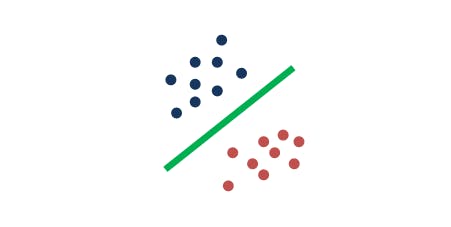

Support Vector Machine (SVM)

“Given a set of training examples, each marked as belonging to one or the other of two categories, an SVM training algorithm builds a model that assigns new examples to one category or the other”. “SVM classifies data by finding the linear decision boundary (hyperplane) that separates all data points of one class from those of the other class”.

SVM

SVM

“An SVM model is a representation of the examples as points in space, mapped so that the examples of the separate categories are divided by a clear gap that is as wide as possible. New examples are then mapped into that same space and predicted to belong to a category based on which side of the gap they fall”.

Best Used…

For data that has exactly two classes (you can also use it for multiclass classification with a technique called error-correcting output codes)

For high-dimensional, nonlinearly separable data

When you need a classifier that’s simple, easy to interpret, and accurate Common

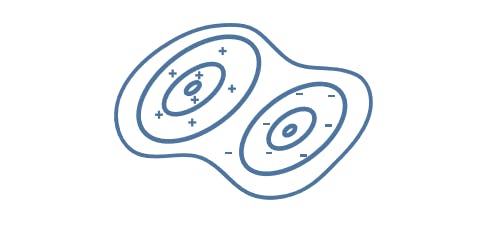

Naive Bayes

“Naive Bayes classifier assumes that the presence of a particular feature in a class is unrelated to the presence of any other feature. Bayes classifiers assume that the value of a particular feature is independent) of the value of any other feature, given the class variable.” We want to know the emotion of a piece of music based on its lyrics, we can use a Naive Bayes Classifier.

Naive Bayes: Mathworks — 90221_80827v00_machine_learning_section4_ebook_v03.pdf

Naive Bayes: Mathworks — 90221_80827v00_machine_learning_section4_ebook_v03.pdf

Naive Bayes classifies new data based on the highest probability of its belonging to a particular class.

Best Used…

For a small dataset containing many parameters

When you need a classifier that’s easy to interpret

When the model will encounter scenarios that weren’t in the training data, as is the case with many financial and medical applications

You made it to the end of day 007. Tomorrow, we’ll continue with more commonly used classification algorithms. Thank you for taking time out of your schedule and allowing me to be your guide on this journey.

Refrence: Mathworks 90221_80827v00_machine_learning_section4_ebook_v03.pdf

*https://en.wikipedia.org/wiki/Support_vector_machine*

*https://www.analyticsvidhya.com/blog/2017/09/naive-bayes-explained/*